SpeechKit 12

Liberate the Desktop

Chant SpeechKit

You don’t have to sit in front of a computer with a mouse and keyboard to use information technology. Your applications can be enhanced to speak and listen to you wherever you need them.

Speech recognition is the process of converting an acoustic signal (i.e., audio data) captured by a microphone or a telephone into a set of words. These words can be used for controlling computer functions, data entry, and application processing.

Speech synthesis is the process of converting words to phonetic and prosodic symbols and generating synthetic speech audio data. Synthesized speech can be used for answering questions, event notification, and reading documents aloud.

What is Speech Management?

Speech management enables you to:

- control application functions by speaking rather than having to use a mouse or keyboard,

- capture data by speaking rather than typing, and

- prompt and confirm data capture with spoken or audio acknowledgement.

Application benefits include:

- enhanced speed and accuracy of data capture,

- added flexibility of running applications in a variety of environments, and

- expanded operating scenarios for hands-free computing.

What is SpeechKit?

Chant SpeechKit handles the complexities of speech recognition and speech synthesis to minimize the programming necessary to develop software that speaks and listens.

It simplifies the process of managing Apple Speech, Google android.speech, Microsoft Azure Speech, Microsoft SAPI 5, Microsoft Speech Platform, Microsoft WindowsMedia (UWP and WinRT), and Nuance Dragon NaturallySpeaking recognizers, and managing Acapela, Apple AVFoundation, Cepstral, CereProc, Google android.speech.tts, Microsoft Azure Speech, Microsoft SAPI 5, Microsoft Speech Platform, and Microsoft WindowsMedia (UWP and WinRT) synthesizers.

SpeechKit includes Android, C++, C++Builder, Delphi, Java, .NET Framework, Objective-C (iOS and macOS), and Swift (iOS and macOS) class libraries to support all your programming languages, along with sample projects for popular IDEs, such as the latest Visual Studio from Microsoft, RAD Studio from Embarcadero, Android Studio from Google, the Java IDEs Eclipse, IntelliJ, JDeveloper, and NetBeans, and Xcode from Apple.

The class libraries can be integrated with 32-bit and 64-bit applications for Android, iOS, macOS, and Windows platforms.

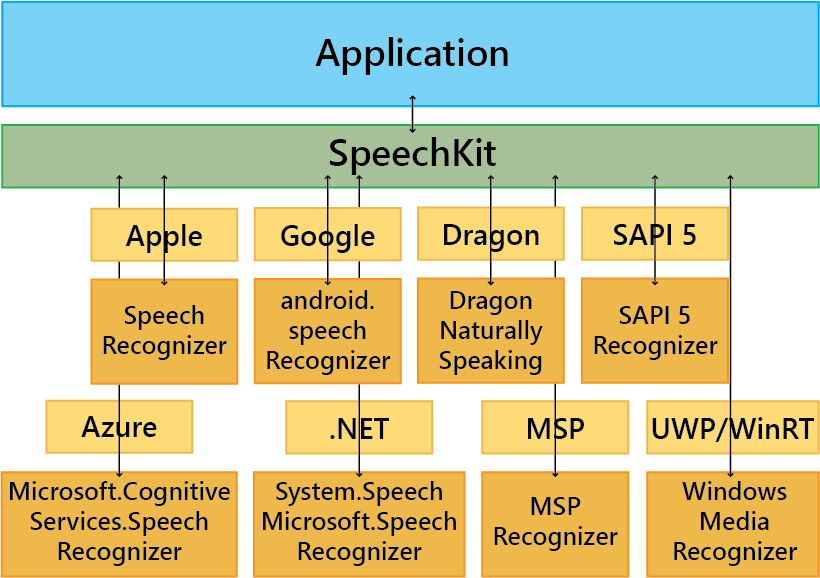

Speech Recognition and Synthesis Architecture

SpeechKit provides a productive way to develop software that listens. Applications set properties and invoke methods through a speech recognition management class. This class handles the low-level functions with speech recognition engines (i.e., recognizers).

Applications establish a session with a recognizer through which spoken language, captured live via a microphone or from recorded audio, is processed and converted to text. Applications use SpeechKit to manage the activities for speech recognition on their behalf. SpeechKit manages the resources and interacts directly with the speech application programming interface (API). SpeechKit supports the following speech APIs for speech recognition:

- Apple Speech,

- Google android.speech,

- Microsoft Azure Speech,

- Microsoft SAPI 5,

- Microsoft Speech Platform,

- Microsoft System.Speech,

- Microsoft Microsoft.Speech,

- Microsoft WindowsMedia, and

- Nuance Dragon NaturallySpeaking.

Applications receive recognized speech as text and notification of other processing states through event callbacks.
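The property, method, and callback flow described above can be sketched as a small observer pattern. This is a minimal illustration under stated assumptions: `RecognizerManager`, `set_property`, `on`, `simulate_utterance`, the `language` property, and the event kinds are hypothetical and are not part of the SpeechKit API. Python is used only for brevity, since SpeechKit's class libraries target the languages listed earlier. `simulate_utterance` stands in for a real recognizer delivering results.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List

# Hypothetical event kinds; SpeechKit's actual event set is not shown here.
RECOGNITION = "recognition"
STATE_CHANGE = "state_change"


@dataclass
class RecognitionEvent:
    kind: str
    text: str = ""


class RecognizerManager:
    """Hypothetical stand-in for a speech recognition management class."""

    def __init__(self) -> None:
        self.properties: Dict[str, str] = {}
        self._handlers: Dict[str, List[Callable[[RecognitionEvent], None]]] = {}

    def set_property(self, name: str, value: str) -> None:
        # Applications configure the session through properties.
        self.properties[name] = value

    def on(self, kind: str, handler: Callable[[RecognitionEvent], None]) -> None:
        # Register a callback for one kind of event.
        self._handlers.setdefault(kind, []).append(handler)

    def _emit(self, event: RecognitionEvent) -> None:
        # Deliver the event to every callback registered for its kind.
        for handler in self._handlers.get(event.kind, []):
            handler(event)

    def simulate_utterance(self, text: str) -> None:
        # Stand-in for a recognizer: report state changes around one recognized phrase.
        self._emit(RecognitionEvent(STATE_CHANGE, "listening"))
        self._emit(RecognitionEvent(RECOGNITION, text))
        self._emit(RecognitionEvent(STATE_CHANGE, "idle"))


log: List[str] = []
mgr = RecognizerManager()
mgr.set_property("language", "en-US")  # hypothetical property name
mgr.on(RECOGNITION, lambda e: log.append(e.text))
mgr.on(STATE_CHANGE, lambda e: log.append("state:" + e.text))
mgr.simulate_utterance("open file")
# log is now ["state:listening", "open file", "state:idle"]
```

The application never polls the recognizer. It registers handlers once, and results arrive as events in the order the engine produces them.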

SpeechKit Architecture for Speech Recognition

SpeechKit encapsulates all of the technologies necessary to make the process of recognizing speech simple and efficient for applications.

SpeechKit simplifies the process of recognizing speech by handling the low-level activities directly with a recognizer.

Instantiate SpeechKit to recognize speech within the application and destroy SpeechKit to release its resources when speech recognition is no longer needed.
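One way to honor the instantiate-then-destroy rule is to tie the release of engine resources to a scope, so the release happens even when an error interrupts speech processing. The sketch below assumes a hypothetical `SpeechSession` class, which is not a SpeechKit type, and uses a Python context manager. In C++ the same idea is RAII (release in the destructor), and in Java it is try-with-resources with `AutoCloseable`.

```python
class SpeechSession:
    """Hypothetical session that holds engine resources until closed."""

    def __init__(self, engine_name: str) -> None:
        self.engine_name = engine_name
        self.open = True  # stands in for acquired recognizer/synthesizer resources

    def close(self) -> None:
        # Release the resources; calling close() again is harmless.
        self.open = False

    def __enter__(self) -> "SpeechSession":
        return self

    def __exit__(self, exc_type, exc, tb) -> bool:
        self.close()
        return False  # let any exception propagate after cleanup


with SpeechSession("hypothetical-recognizer") as session:
    in_use = session.open  # resources are held while speech is needed
# Leaving the block closes the session, even if the body raised an exception.
```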

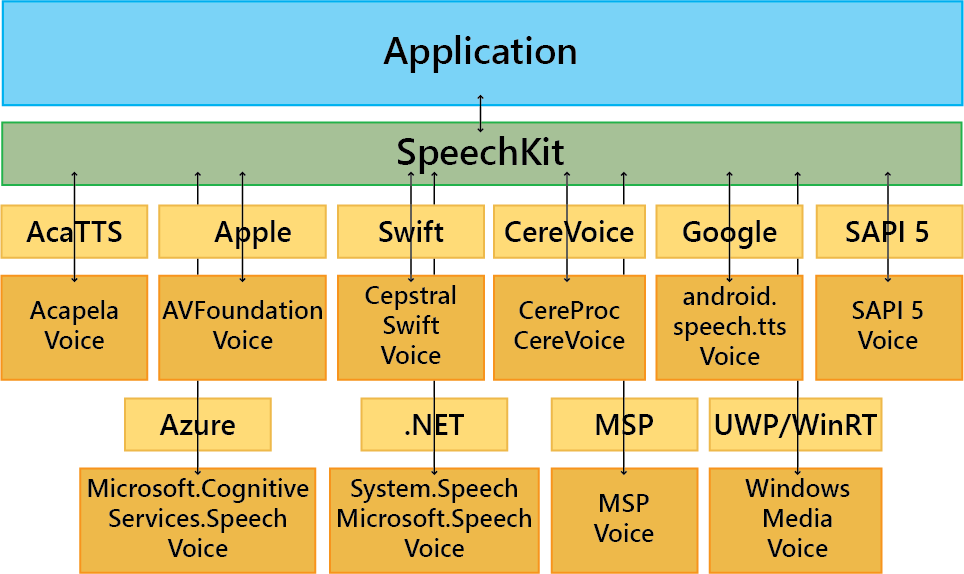

Speech Synthesis Management

SpeechKit provides a productive way to develop software that speaks. Applications set properties and invoke methods through the speech synthesis management class. This class handles the low-level functions with text-to-speech engines (i.e., synthesizers or voices).

Applications establish a session with a synthesizer through which speech is synthesized from text. Applications use SpeechKit to manage the synthesizer resources on their behalf. SpeechKit manages the resources and interacts directly with a speech application programming interface (API). SpeechKit supports the following speech APIs for speech synthesis:

- Acapela TTS,

- Apple AVFoundation,

- Cepstral Swift,

- CereProc CereVoice,

- Google android.speech.tts,

- Microsoft Azure Speech,

- Microsoft SAPI 5,

- Microsoft Speech Platform,

- Microsoft System.Speech,

- Microsoft Microsoft.Speech, and

- Microsoft WindowsMedia.

Applications receive notification of synthesis processing states through event callbacks.

SpeechKit Architecture for Speech Synthesis

The ChantTTS class encapsulates all of the technologies necessary to make the process of synthesizing speech simple for your application. Optionally, it can save the session properties for your application to ensure they persist across application invocations.
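Optionally persisting session properties amounts to saving a small key/value document when the application exits and overlaying it on defaults when the application starts. This sketch assumes JSON storage, a hypothetical file name, and hypothetical property names (`voice`, `rate`). It illustrates the idea only and does not describe how ChantTTS actually stores its properties.

```python
import json
import tempfile
from pathlib import Path
from typing import Dict


def save_properties(path: Path, props: Dict[str, str]) -> None:
    # Persist the session properties as JSON for the next run.
    path.write_text(json.dumps(props, indent=2, sort_keys=True), encoding="utf-8")


def load_properties(path: Path, defaults: Dict[str, str]) -> Dict[str, str]:
    # Start from defaults, then overlay whatever a previous run saved.
    props = dict(defaults)
    if path.exists():
        props.update(json.loads(path.read_text(encoding="utf-8")))
    return props


with tempfile.TemporaryDirectory() as tmp:
    settings = Path(tmp) / "tts_session.json"         # hypothetical file name
    defaults = {"voice": "default", "rate": "0"}      # hypothetical property names
    first_run = load_properties(settings, defaults)   # no file yet, so defaults
    first_run["rate"] = "2"                           # user adjusts the speaking rate
    save_properties(settings, first_run)
    second_run = load_properties(settings, defaults)  # restored on the next launch
```

Overlaying saved values on defaults keeps the application working when the file is missing or when a newer version adds properties an older file does not contain.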

SpeechKit simplifies the process of synthesizing speech by handling the low-level activities directly with a synthesizer.

Instantiate SpeechKit to synthesize speech within the application and destroy SpeechKit to release its resources when speech synthesis is no longer needed.

Feature Summary

Chant SpeechKit handles the complexities of speech recognition and speech synthesis. The classes minimize the programming efforts necessary to construct software that speaks and listens.

A SpeechKit application can:

- Control application functions by speaking rather than having to use a mouse or keyboard;

- Prompt users for applicable data capture;

- Capture data by speaking rather than typing;

- Confirm data capture with spoken or audio acknowledgement;

- Transcribe audio files to text; and

- Synthesize speech to files.
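The last two capabilities both involve moving PCM audio through files. The sketch below uses Python's standard `wave` module and assumes 16 kHz, mono, 16-bit PCM. A sine tone stands in for the audio a synthesizer would deliver. Reading the file back in fixed-size chunks mimics how a transcription loop might feed a recognizer. None of the function names are SpeechKit APIs.

```python
import math
import struct
import tempfile
import wave
from pathlib import Path
from typing import Iterable, Iterator, List

SAMPLE_RATE = 16000  # assumed format: 16 kHz, mono, 16-bit PCM


def write_wav(path: Path, chunks: Iterable[bytes]) -> None:
    # Append each PCM chunk (e.g., delivered by a synthesizer callback) to a WAV file.
    with wave.open(str(path), "wb") as wav:
        wav.setnchannels(1)
        wav.setsampwidth(2)  # 16-bit samples
        wav.setframerate(SAMPLE_RATE)
        for chunk in chunks:
            wav.writeframes(chunk)


def read_wav_chunks(path: Path, frames_per_chunk: int) -> Iterator[bytes]:
    # Yield fixed-size PCM chunks, as a transcription loop might feed a recognizer.
    with wave.open(str(path), "rb") as wav:
        while True:
            data = wav.readframes(frames_per_chunk)
            if not data:
                break
            yield data


def tone_chunk(freq: float, frames: int) -> bytes:
    # Placeholder audio (a sine tone) standing in for synthesized speech.
    samples = (int(8000 * math.sin(2 * math.pi * freq * n / SAMPLE_RATE)) for n in range(frames))
    return b"".join(struct.pack("<h", s) for s in samples)


with tempfile.TemporaryDirectory() as tmp:
    out = Path(tmp) / "speech.wav"
    write_wav(out, [tone_chunk(440.0, 1600), tone_chunk(660.0, 1600)])
    chunks: List[bytes] = list(read_wav_chunks(out, 1000))
```

With 3,200 frames written and 1,000 frames read per chunk, the final chunk is shorter. A recognizer loop should accept a partial last buffer.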

Recognizers are accessed via proprietary application programming interfaces (APIs). SpeechKit supports the following speech APIs for speech recognition:

| Speech API | Platforms |
|---|---|
| Apple Speech | ARM, x64, x86 |
| Google android.speech | ARM |
| Microsoft Azure Speech | ARM, x64, x86 |
| Microsoft SAPI 5 | x64, x86 |
| Microsoft Speech Platform | x64, x86 |
| Microsoft .NET System.Speech | x64, x86 |
| Microsoft .NET Microsoft.Speech | x64, x86 |
| Microsoft WindowsMedia (UWP) | ARM, x64, x86 |
| Microsoft WindowsMedia (WinRT) | x64, x86 |
| Nuance Dragon NaturallySpeaking | x64, x86 |

Synthesizers are accessed via proprietary application programming interfaces (APIs). SpeechKit supports the following speech APIs for speech synthesis:

| Speech API | Platforms |
|---|---|
| Acapela TTS | x64, x86 |
| Apple AVFoundation | ARM, x64, x86 |
| Cepstral Swift | x64, x86 |
| CereProc CereVoice | x64, x86 |
| Google android.speech.tts | ARM |
| Microsoft Azure Speech | ARM, x64, x86 |
| Microsoft SAPI 5 | x64, x86 |
| Microsoft Speech Platform | x64, x86 |
| Microsoft .NET System.Speech | x64, x86 |
| Microsoft .NET Microsoft.Speech | x64, x86 |
| Microsoft WindowsMedia (UWP) | ARM, x64, x86 |
| Microsoft WindowsMedia (WinRT) | x64, x86 |
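Platform support differs by API. An application that must both speak and listen on a given architecture can therefore intersect the recognition and synthesis tables. The dictionaries below transcribe those two tables. `apis_for` and `speak_and_listen_apis` are hypothetical helpers. Architecture is only one constraint: each API is also tied to its own operating system or runtime. For example, the Apple APIs require iOS or macOS, and the Google APIs require Android.

```python
from typing import Dict, List, Set

# Platform support as listed in the speech recognition table.
RECOGNITION_APIS: Dict[str, Set[str]] = {
    "Apple Speech": {"ARM", "x64", "x86"},
    "Google android.speech": {"ARM"},
    "Microsoft Azure Speech": {"ARM", "x64", "x86"},
    "Microsoft SAPI 5": {"x64", "x86"},
    "Microsoft Speech Platform": {"x64", "x86"},
    "Microsoft .NET System.Speech": {"x64", "x86"},
    "Microsoft .NET Microsoft.Speech": {"x64", "x86"},
    "Microsoft WindowsMedia (UWP)": {"ARM", "x64", "x86"},
    "Microsoft WindowsMedia (WinRT)": {"x64", "x86"},
    "Nuance Dragon NaturallySpeaking": {"x64", "x86"},
}

# Platform support as listed in the speech synthesis table.
SYNTHESIS_APIS: Dict[str, Set[str]] = {
    "Acapela TTS": {"x64", "x86"},
    "Apple AVFoundation": {"ARM", "x64", "x86"},
    "Cepstral Swift": {"x64", "x86"},
    "CereProc CereVoice": {"x64", "x86"},
    "Google android.speech.tts": {"ARM"},
    "Microsoft Azure Speech": {"ARM", "x64", "x86"},
    "Microsoft SAPI 5": {"x64", "x86"},
    "Microsoft Speech Platform": {"x64", "x86"},
    "Microsoft .NET System.Speech": {"x64", "x86"},
    "Microsoft .NET Microsoft.Speech": {"x64", "x86"},
    "Microsoft WindowsMedia (UWP)": {"ARM", "x64", "x86"},
    "Microsoft WindowsMedia (WinRT)": {"x64", "x86"},
}


def apis_for(table: Dict[str, Set[str]], arch: str) -> List[str]:
    # Names of the speech APIs whose listed platforms include `arch`.
    return sorted(name for name, archs in table.items() if arch in archs)


def speak_and_listen_apis(arch: str) -> List[str]:
    # APIs listed for both recognition and synthesis on `arch`.
    both = set(apis_for(RECOGNITION_APIS, arch)) & set(apis_for(SYNTHESIS_APIS, arch))
    return sorted(both)


print(speak_and_listen_apis("ARM"))
# ['Microsoft Azure Speech', 'Microsoft WindowsMedia (UWP)']
```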

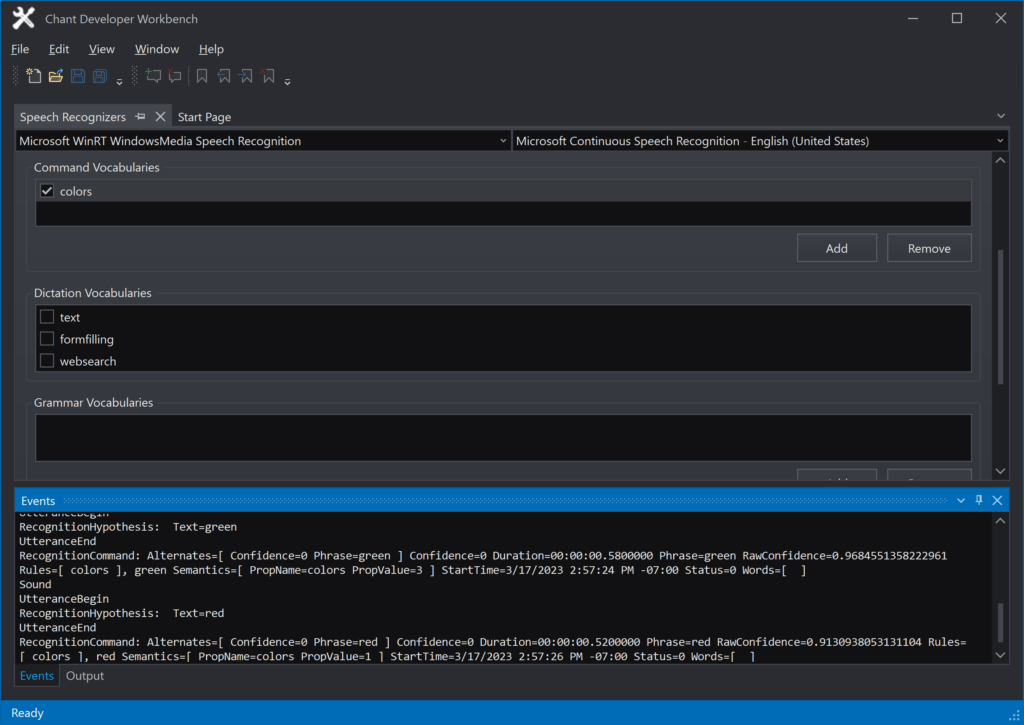

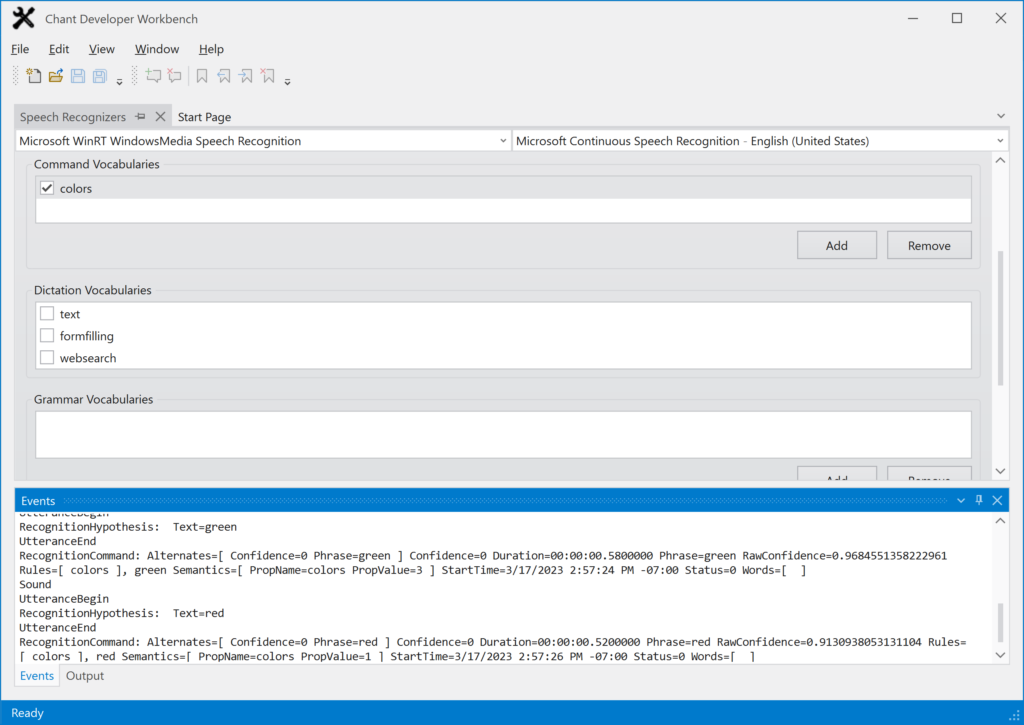

Within Chant Developer Workbench, you can:

- Enumerate speech engines to test recognizer- and synthesizer-specific features;

- Trace recognition and synthesis events;

- Activate and test grammars; and

- Play back TTS markup.

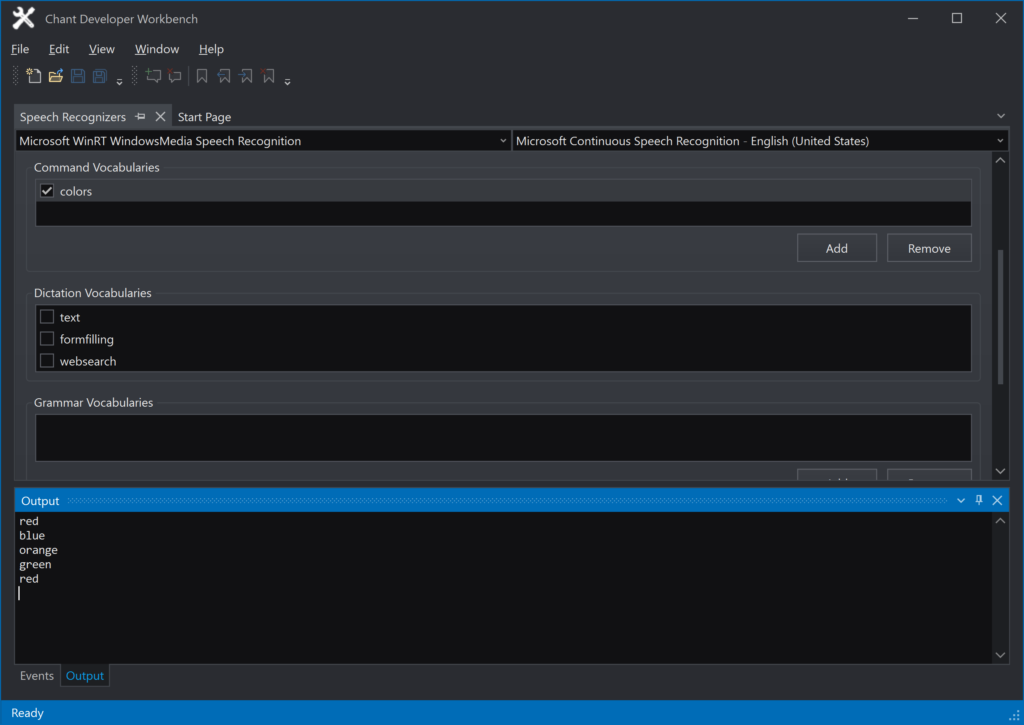

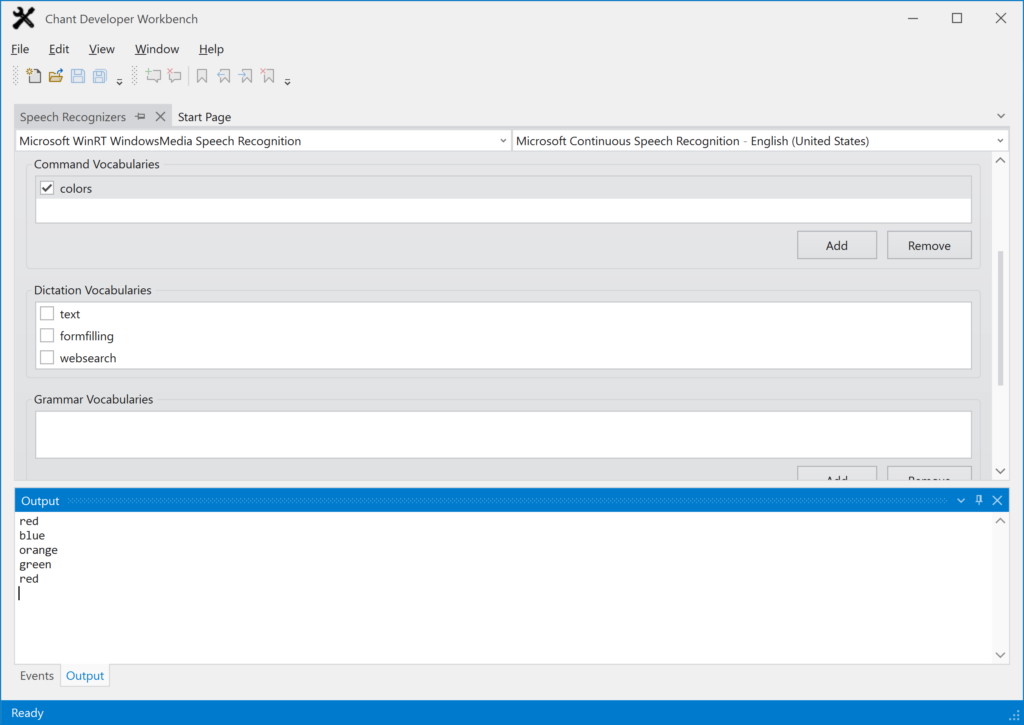

Recognizer Management: Enumerate and test recognizers with command, dictation, and grammar vocabularies.

Recognition Events: Recognize speech from a microphone and prerecorded audio. Trace recognition events in the Events window.

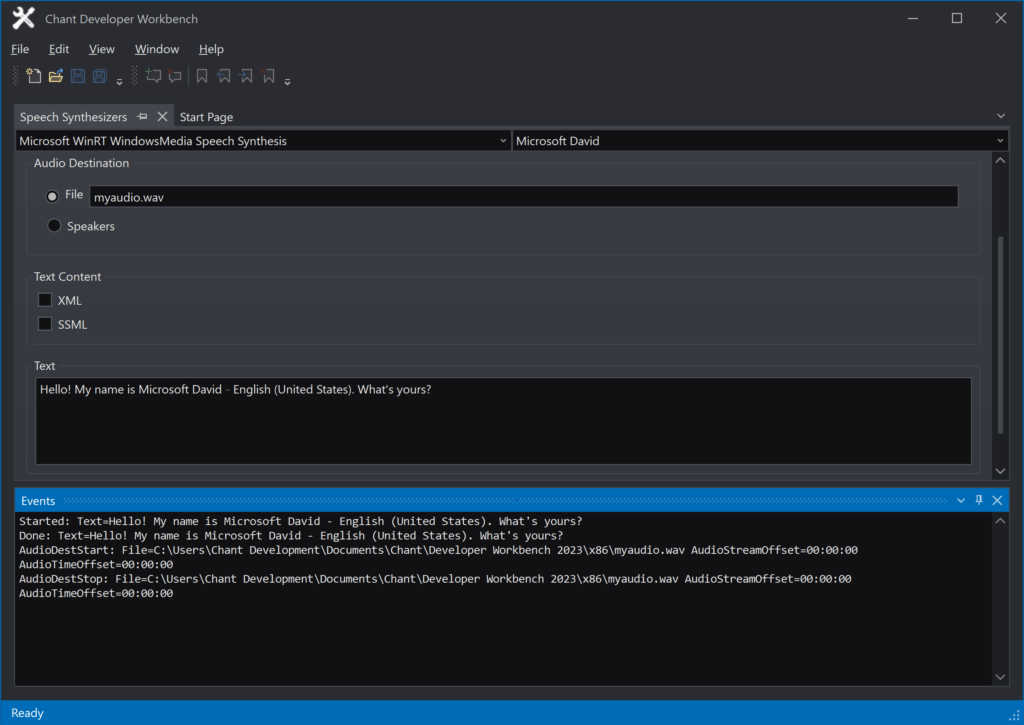

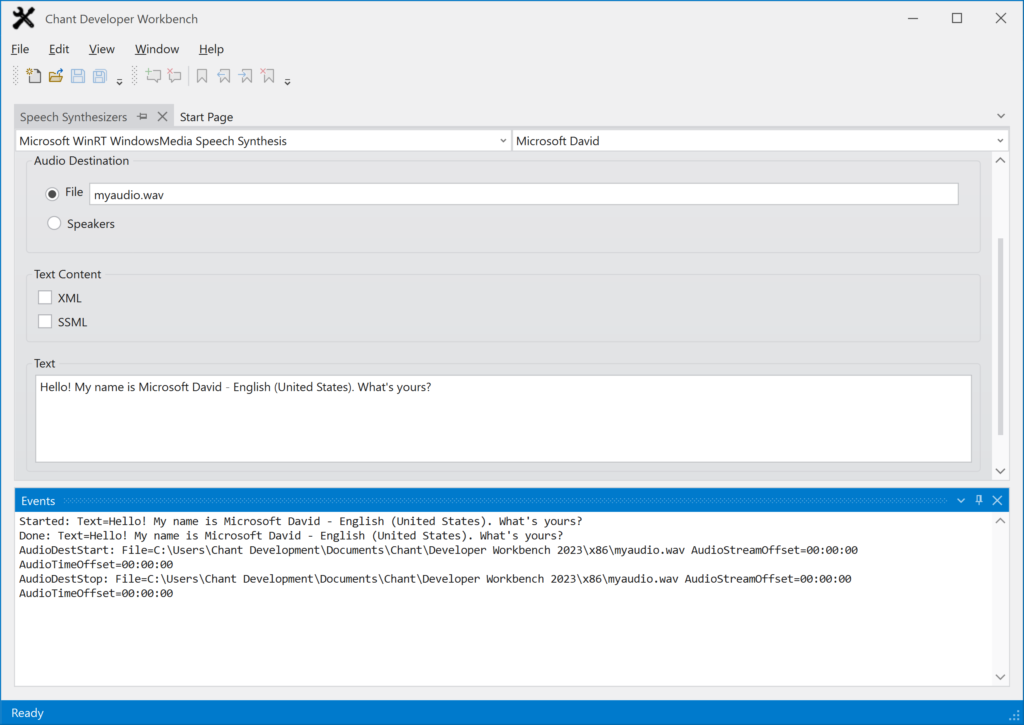

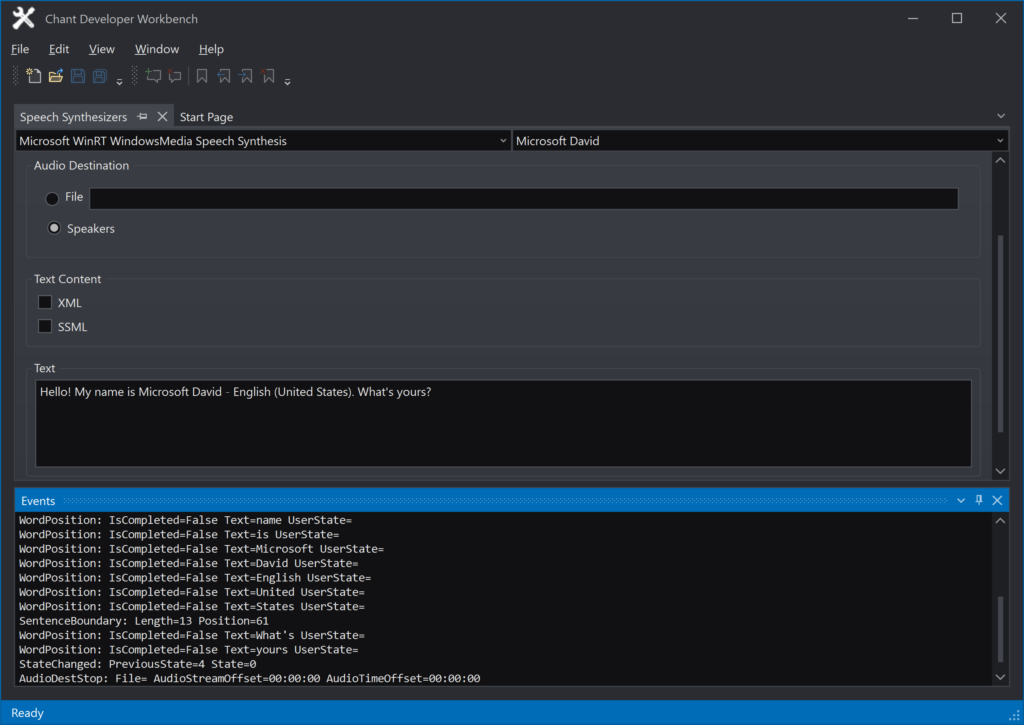

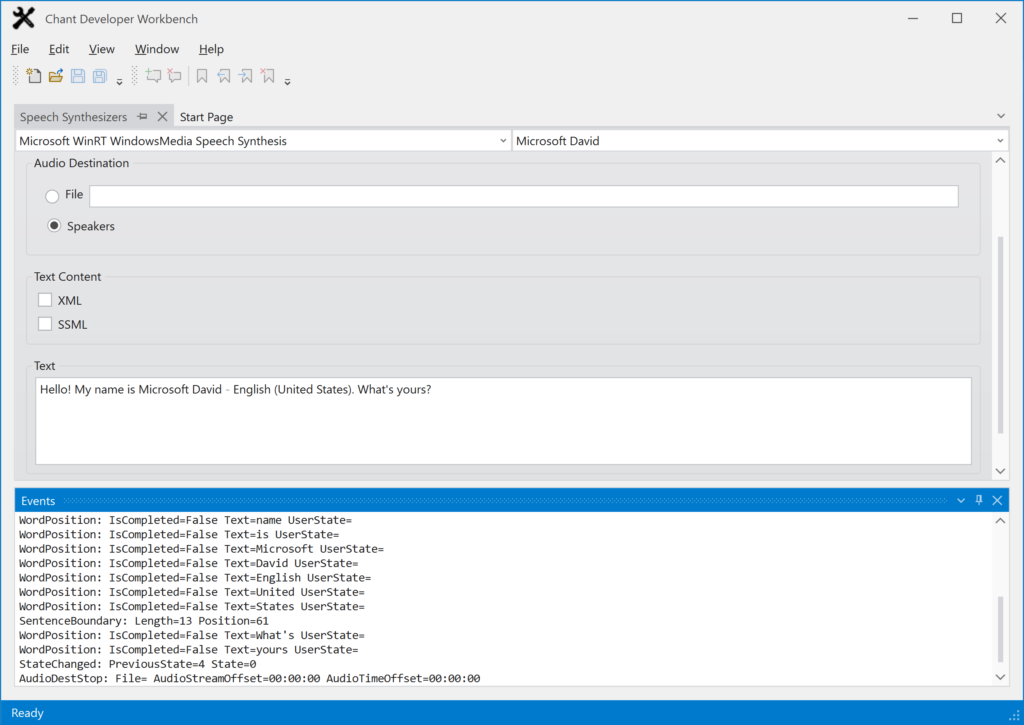

Synthesizer Management: Enumerate and test synthesizers with live playback or by persisting synthesized speech to files.

Synthesis Events: Trace synthesis events in the Events window.

SpeechKit License

You may explore the capabilities of Chant SpeechKit for 30 days. To continue to use the product after 30 days, you must purchase a license for the software or stop using the software and remove it from your system.

A valid purchased license gives you the right to construct executable applications that use the applicable class library and to distribute the class library with those applications without royalty obligations to Chant.

The Chant SpeechKit license is a single end-user license. Each developer who installs and uses SpeechKit to develop applications must have their own license.

SpeechKit class library names vary by platform; for example, the Windows 32-bit and 64-bit libraries have different names. This helps ensure the correct library is deployed with your application.

Chant SpeechKit is licensed separately or as part of Chant Developer Workbench. You may purchase a license for Chant SpeechKit online at the Chant store or through your preferred software reseller.

SpeechKit System Requirements

Development Environment

- Intel processor or equivalent,

- Microsoft Windows 10 or 11,

- Apple macOS Catalina or later,

- 120 MB of hard drive space,

- CD-ROM drive,

- VGA or higher-resolution monitor,

- Apple Speech, Google android.speech, Microsoft Azure Speech, Microsoft SAPI 5 compatible, Microsoft Speech Platform, Microsoft WindowsMedia (UWP and WinRT), or Nuance Dragon NaturallySpeaking recognizer,

- Acapela, Apple AVFoundation, Cepstral, CereProc, Google android.speech.tts, Microsoft Azure Speech, Microsoft SAPI 5, Microsoft Speech Platform, or Microsoft WindowsMedia (UWP and WinRT) synthesizer,

- Android, C++, C++Builder, Delphi, Java (JDK 1.8, 11, 13, 14, 15, 16, 17, 18, 19), .NET (Framework 4.5+, Core 3.1, 5.0, 6.0, 7.0), Objective-C, or Swift development environment, and

- Close-talk microphone.